What’s all the Hype with Transformers? The Trouble with Natural Language Processing

Introduction

This post highlights the practical differences between traditional Recurrent Neural Networks (RNNs) and the newer Transformer Architecture. We are not going to dive deeply into the mathematical details; instead, we focus on what the new architecture means in practice when applying Transformer Models. If you want a mathematical deep dive, I highly recommend 3Blue1Brown’s video series on neural networks and deep learning.

So, with that out of the way, let’s get started!

In the last few years, the world of software development has been shaken by the rise of new and powerful AI models, particularly in the field of Natural Language Processing (NLP) and Conversational AI. It is safe to assume that you, dear reader, have heard the term “Transformer” on repeat over the last year. It is practically impossible to browse the internet without stumbling upon an article about AI (this one included 😉) or being hit by advertisements for applications, hardware, platforms, or services that boast the use of AI in some form or another. So, what is all the hype about? What is a Transformer? Why are AIs so powerful all of a sudden?

And most importantly, why should you care?

The Troubles with Natural Language Processing

At the core of computer science lies the idea of processing information. For most of human history, the information we processed and stored was transmitted in the form of language: first as spoken language, passed on from one generation to the next in the form of stories, and later as writing, which allowed us to store language encoded as letters in books. Only very recently in our history have we started dabbling with computers, rocks infused with lightning, which can store and process information in ways we could never have imagined.

Over our long history as a species, we have cataloged and categorized the information we store and pass on. We discovered that because language carries information, it has to do so in a structured manner. This, in turn, led us to develop rules and structures for our language.

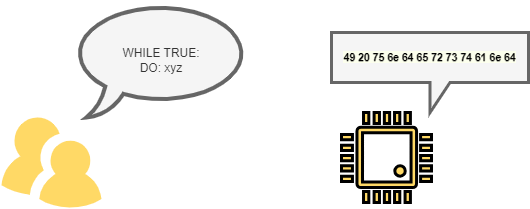

Since we now have very sophisticated tools for processing structured data such as numbers (computers), it was only a small step to realize that, if language follows a set of rules just like math does, we might be able to use these tools to process language as well.

You can see the results of this endeavor every day, for example on the very webpage on which you are reading this article. It was written in a programming language, a formal language that borrows a small subset of English words, and a computer processed it according to a strict set of rules to produce the page in front of you.

But something is missing here. The languages we use to communicate with computers are very different from the language we use to communicate with each other: the former have a comparatively simple structure and syntax, while the latter is far more complex and nuanced.

Wouldn’t it be great if we had some kind of mechanism or formula that we could apply to naturally spoken language, which would allow us to, for example, translate a sentence from one language to another automatically or create the HTML code for a webpage from a natural language description of the webpage?

It ought to be possible. After all, language is just a set of rules, right?

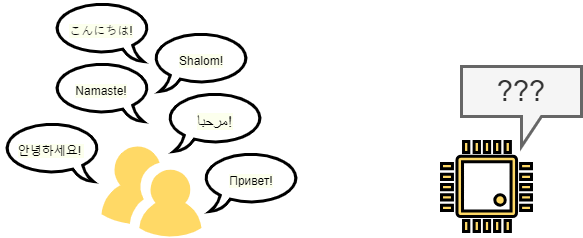

This is where the broad field of Natural Language Processing (NLP) comes in. NLP is the field of computer science that deals with the interaction between computers and humans through natural language. It is dominated by efforts to create AIs that can ingest highly complex data in the form of human language and automatically produce some kind of meaningful output.

For a long time, progress in this field was slow. The main reason was that natural language has a very complex and nuanced structure, and its rules are not as clear-cut as the rules of a programming language.

When we are dealing with text or spoken language, we are always dealing with sequential data. This means that the order of the tokens in a given input sequence holds valuable information, which our AI models need to understand in order to perform well.

An AI model is, at its core, a function that takes an input and then produces a prediction based on that input. In the case of NLP, the input is a sequence of tokens (words, characters, etc.) and the output is a prediction about the most likely next token, or the sentiment of the text, or the intent of the speaker, etc. That means that every AI model dealing with natural language has to be able to capture the importance of the order of the tokens in the input sequence.

To visualize this, let’s consider the following two sentences:

- “The cat sat on the mat.”

- “The mat sat on the cat.”

The first sentence makes perfect sense: a cat is sitting on a mat. The second sentence, however, is nonsensical, because a mat cannot sit on a cat. The information a sentence carries lies not only in the words themselves but largely in how those words are arranged. This simple example shows how the order of the tokens in a sentence can completely change its meaning. As humans, we recognize this effortlessly, because our brains are highly specialized for processing language, and we easily pick up the implied information that arises from the order of the tokens. Computers, however, are not so lucky. And this is where the trouble begins.
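
To make this concrete, here is a small sketch that compares the two sentences once as ordered sequences and once as unordered collections of words. The naive tokenizer (lowercase, strip the period, split on spaces) is an assumption for illustration only; real NLP systems use far more sophisticated tokenizers.

```python
from collections import Counter

sentence_a = "The cat sat on the mat."
sentence_b = "The mat sat on the cat."

def tokenize(text: str) -> list[str]:
    # Naive whitespace tokenizer: lowercase, drop the trailing period, split on spaces.
    return text.lower().rstrip(".").split()

tokens_a = tokenize(sentence_a)
tokens_b = tokenize(sentence_b)

# As ordered sequences, the two sentences differ...
print(tokens_a == tokens_b)  # False
# ...but as unordered "bags" of words, they contain exactly the same tokens.
print(Counter(tokens_a) == Counter(tokens_b))  # True
```

Everything that distinguishes the two sentences lives in the order of the tokens, which is exactly the information a model must not throw away.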

Traditional Neural Networks

Early on, we figured that we could try building mathematical models that mimic the workings of the human brain, which is remarkably good at processing language. This led to the development of the field of Artificial Neural Networks (ANNs): models (think highly complex statistical functions) whose way of processing data is loosely inspired by the way the human brain does it.

A “classic” neural network consists of three conceptual components:

- An input layer whose neurons are each fed a part of the input data.

- One or more hidden layers that contain neurons that perform computations on the input data.

- An output layer that contains neurons that produce the final prediction.

At its core, a neuron is simply a mathematical function (for example, a linear function) that takes one or more inputs and produces an output. To mimic the workings of a biological brain, AIs are built by connecting these neurons into a network, in which neurons pass their outputs on to other neurons before the network produces its final prediction. (This is a gross oversimplification that completely ignores the actual math, but it should give you a rough idea of how a neural network works.) Here you can see the (simplified) innards of one such neuron:

As you can see, the neuron takes an input (or even multiple), applies a mathematical function to it, and then produces an output. This mathematical function can be freely chosen from a variety of functions, which we will not go into here. What matters is that the neuron is able to process the input data and produce an output, which can then be passed on to other neurons in the network. The main feature of neural networks is that the weights (here called w) and the bias (here called b) of the function that the neuron applies to the input data are learned during the training of the model. Once we have a single neuron figured out, we can then connect multiple neurons together to form a network, which can then process data in a more complex way.
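
As a minimal sketch, a single neuron can be written as a weighted sum of its inputs plus a bias, passed through an activation function. The choice of the sigmoid activation and the concrete weight and bias values below are assumptions for illustration; in a real network, w and b would be learned during training.

```python
import math

def sigmoid(z: float) -> float:
    # One common activation choice: squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    # Weighted sum of the inputs plus the bias: z = w1*x1 + ... + wn*xn + b
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Apply the activation function to the weighted sum.
    return sigmoid(z)

# Hypothetical, hand-picked parameters (normally learned during training).
print(neuron([1.0, 2.0], weights=[0.5, -0.25], bias=0.0))  # 0.5
```

Here the weighted sum happens to be exactly 0, and the sigmoid of 0 is 0.5.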

These networks can be built to deal with a wide variety of data, such as images, sound, or text. As long as you can represent the input data as a series of numbers, you can construct a neural network to process that data.
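
For text, one simple way to get such a series of numbers is to give every distinct word an integer ID and, if needed, turn each ID into a one-hot vector. The sketch below is a toy under that assumption; the helper names `build_vocabulary` and `one_hot` are hypothetical, and modern models use learned tokenizers and embeddings instead.

```python
def build_vocabulary(tokens: list[str]) -> dict[str, int]:
    # Assign each distinct token an integer ID in order of first appearance.
    vocab: dict[str, int] = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

def one_hot(index: int, size: int) -> list[int]:
    # A vector of zeros with a single 1 at the token's ID.
    vector = [0] * size
    vector[index] = 1
    return vector

tokens = "the cat sat on the mat".split()
vocab = build_vocabulary(tokens)
ids = [vocab[t] for t in tokens]
print(vocab)  # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
print(ids)    # [0, 1, 2, 3, 0, 4]
print(one_hot(vocab["cat"], len(vocab)))  # [0, 1, 0, 0, 0]
```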

The idea is that you dump some input data into the input layer, have it processed by the “deeper” or “hidden” layers, and then look at the outputs of the neurons in the output layer to see what the neural network “thinks” of the data.
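
The flow from input layer through a hidden layer to an output layer can be sketched as a "forward pass". This is a toy under stated assumptions: 3 inputs, 2 hidden neurons, 1 output neuron, a ReLU activation in the hidden layer, and hand-picked weights (chosen so the arithmetic is exact) instead of trained ones.

```python
from typing import Callable

def relu(z: float) -> float:
    # Passes positive values through unchanged and clips negatives to zero.
    return max(0.0, z)

def identity(z: float) -> float:
    return z

def dense_layer(inputs: list[float], weights: list[list[float]], biases: list[float],
                activation: Callable[[float], float]) -> list[float]:
    # One row of weights (and one bias) per neuron; every neuron sees every input.
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(features: list[float]) -> list[float]:
    # Hypothetical parameters: 3 inputs -> 2 hidden neurons -> 1 output neuron.
    hidden = dense_layer(features,
                         weights=[[0.5, -0.25, 0.25], [0.25, 0.5, -1.0]],
                         biases=[0.0, 0.5],
                         activation=relu)
    return dense_layer(hidden, weights=[[2.0, -1.0]], biases=[0.0], activation=identity)

print(forward([1.0, 2.0, 3.0]))  # [1.5]
```

In this example the second hidden neuron's weighted sum is negative, so ReLU switches it off and only the first hidden neuron contributes to the output.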

For example, if I were to construct a neural network for weather prediction, I would feed the network a series of numbers representing temperature, humidity, and air pressure and then look at the output of the network. There could be a neuron that produces the likelihood of rain, another neuron that produces the likelihood of sunshine, etc.

Suppose my hypothetical neural network's output neuron for rain produces the value 0.8, while the neuron for sunshine produces the value 0.2. We would read this as the network estimating an 80% chance of rain and a 20% chance of sunshine.
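
Reading outputs as percentages only works if they are non-negative and sum to 1. One common way to achieve this (an assumption here, not something the weather example specifies) is to apply a softmax to the raw output scores. Softmax also assumes the outcomes are mutually exclusive, which is a simplification for rain versus sunshine. The raw scores below are hypothetical and chosen to reproduce the 80/20 split.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    # Subtracting the max score improves numerical stability without changing the result.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["rain", "sunshine"]
# Hypothetical raw output scores ("logits") of the two output neurons.
logits = [math.log(4.0), 0.0]
probs = softmax(logits)
for label, p in zip(labels, probs):
    print(f"{label}: {p:.0%}")
# rain: 80%
# sunshine: 20%
```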

However, this approach runs into a problem when it comes to processing sequential data. (Remember, natural language is sequential data, and the order of the tokens in a sequence matters.) Look at the following illustration of a traditional neural network being fed the sentence “The cat sat on the” with the goal of predicting the next token in the sequence (which should be “mat”):

As you can see, the input data is fed into the input layer, and then it is passed through the hidden layers where the computations are performed. Finally, the output layer produces the final prediction.

Can you spot the problem? The input neurons are isolated from each other: each one is fed only a single token from the input sequence, without any information about the other tokens. The network has no built-in notion of a sequence, so nothing tells it that the second token follows the first. In the worst case, if the token information is combined in an order-independent way (for example, simply added up, as in bag-of-words models), the sentences “The cat sat on the mat.” and “The mat sat on the cat.” look identical to the model, and it produces the same prediction for both. The model is then unable to capture any information about the order of the tokens in the input sequence.
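
The failure mode is easy to reproduce with a toy model that combines its inputs in an order-independent way: it looks up a vector for each token, sums the vectors, and feeds the sum to a single output neuron. The word vectors and output weights are hypothetical (integers keep the sums exact), and this bag-of-words setup is one illustrative case, not the only possible feed-forward design.

```python
# Hypothetical 2-dimensional vectors ("embeddings") for each word.
EMBEDDINGS = {
    "the": [1, 0],
    "cat": [2, 3],
    "sat": [0, 1],
    "on": [1, 1],
    "mat": [4, -1],
}
OUTPUT_WEIGHTS = [0.5, -2.0]  # hypothetical weights of a single output neuron

def bag_of_words_score(tokens: list[str]) -> float:
    # Sum the token vectors element-wise; addition ignores the order of the tokens.
    pooled = [0, 0]
    for token in tokens:
        pooled = [p + v for p, v in zip(pooled, EMBEDDINGS[token])]
    # One output neuron: weighted sum of the pooled features.
    return sum(w * f for w, f in zip(OUTPUT_WEIGHTS, pooled))

a = "the cat sat on the mat".split()
b = "the mat sat on the cat".split()
print(bag_of_words_score(a) == bag_of_words_score(b))  # True
```

Both sentences produce the pooled vector [9, 4], so the model cannot tell them apart, no matter how its weights are chosen.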

Think of it this way: in mathematics, 2 + 3 is the same as 3 + 2, because the order of the numbers does not matter. In natural language, however, the order of the tokens is one of the most important aspects of a sequence and carries significant information about its meaning. If our model is unable to capture this “meta” information, it cannot properly process the largest chunk of information carried by the input sequence and therefore cannot produce meaningful predictions, no matter how hard we train it. It’s as if our model were trying to solve a puzzle without being able to see the pieces.

This, of course, is not ideal, so researchers came up with new architectures that would allow the model to capture information about the order of the tokens in the input sequence.

Recurrent Neural Networks

The first innovation was the Recurrent Neural Network (RNN). RNNs are the traditional architecture for dealing with sequential data and forecasting sequences; a classic use case is translating a sentence from one language to another. To do this well, the model needs to understand the order of the tokens in the input sequence. RNNs achieve this by processing the sequence one token at a time and feeding the output of the previous step (the so-called hidden state) back into the network together with the next token. A simple illustration of this architecture is shown below:

As you can see, the output of the first neuron is fed into the second neuron, and so on. As a result, the computation for each token is influenced by the tokens that came before it. Information about the order of the tokens thus becomes part of the model's internal computations and can influence the final prediction. Our model finally has a way to “see” the pieces of the puzzle.
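
A minimal sketch of this recurrence, assuming a simple (Elman-style) RNN with a single scalar hidden state and a tanh activation: at each step, the new hidden state depends on the current token and on the previous hidden state, and the same weights are reused at every step. The per-word numbers and the weights are hypothetical and untrained; real RNNs use vectors and learned parameters.

```python
import math

# Hypothetical scalar value per word, to keep the sketch tiny.
TOKEN_VALUES = {"the": 0.1, "cat": 0.9, "sat": 0.4, "on": 0.2, "mat": -0.7}

# Hypothetical parameters; the same weights are reused at every time step.
W_INPUT, W_HIDDEN, BIAS = 1.5, 0.8, 0.0

def rnn_final_state(tokens: list[str]) -> float:
    hidden = 0.0  # the initial hidden state carries no information yet
    for token in tokens:
        x = TOKEN_VALUES[token]
        # The new state depends on the current token AND the previous state,
        # so every earlier token influences everything that comes after it.
        hidden = math.tanh(W_INPUT * x + W_HIDDEN * hidden + BIAS)
    return hidden

a = "the cat sat on the mat".split()
b = "the mat sat on the cat".split()
print(rnn_final_state(a), rnn_final_state(b))
print(rnn_final_state(a) != rnn_final_state(b))  # True
```

Unlike a model that simply adds up its inputs, the two sentences now end in clearly different hidden states, because the result depends on the order in which the tokens arrive.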

This is certainly a step in the right direction, but the mechanism has some very serious limitations, which we will discuss in the next part of this series, as well as some of the solutions that have been proposed to overcome these limitations.

Outlook

In the next part of this series, we are going to take a look at how the limitations and drawbacks of Recurrent Neural Networks can be overcome and mitigated. We are also going to look at why the Transformer Architecture was introduced to the field of Natural Language Processing and what it does differently from traditional approaches.

If you want to find out more, get in touch with us.